Paper Written For Publication in Proceedings of the I Mech E (Part B)

A Computer Tool for Assessing the Education and Training Requirements of ERP System Users / MRP II Production Planning and Control System Users

Austen K Jones

A K Kochhar

M W M Hollwey

Abstract

The problem of accurate specification of the education and training needs of production planning and control system users is important if the users are to be competent and the system implementation is to be successful.

This paper outlines an innovative approach, and the associated computer-based tool, for specifying the educational needs of production planning and control personnel. The approach determines a person’s job tasks (Activities) and specifies the person’s Required Knowledge profile with reference to those tasks. The Required Knowledge profile can then be compared with the individual’s Actual Knowledge profile, the differences between the two being the specification of education requirements.

The amount of information that needs to be collected and stored, and the computer’s inherent speed of data processing, make it desirable, indeed necessary, to computerise the approach. The logical structure and use of the resulting tool are described.

1 Introduction

User education and training are crucially important aspects of the successful implementation and operation of a production planning and control (PPC) system [Muhlemann et al 1992]. Ensuring that users receive the education and training they need to be competent in their work roles is important to implementation success [Udo & Ehie 1996, Duchessi et al 1988], yet it is an area that has received little attention from the academic world and remains a real problem.

This paper describes a framework for addressing this education and training problem. The structure of the education and training requirements specification framework, and the way in which it can be used, are described using the generic manufacturing resource planning (MRP II) system as an example.

2 Education & Training Requirements Specification Approach

The most prevalent method of determining who is taught what in the context of PPC system implementation is to allocate standard courses, for instance as offered by the PPC system vendor, to people in the manufacturing company on the basis of their job titles [Jones et al 1998, Roberts 1986, Iemmolo 1994]. This approach is flawed: different people in different companies will invariably have different education needs, and if those needs are not determined at the outset it cannot be known whether the courses attended will fully satisfy them. Camp et al (1986) liken performing training without a needs analysis to:

"a medical doctor performing surgery based only on the knowledge that the patient doesn't feel well. The surgery may correct the problem but the odds are considerably against it."

A framework for specifying education and training requirements has been developed based on the following hypothesis [Jones et al 1998, McClelland 1989, Bee & Bee 1994, Reid & Barrington 1997]:

1. People perform and are responsible for specific tasks (Activities) as part of their jobs

2. In order to perform a particular Activity competently a person needs to know certain information (or Concepts)

This leads to the idea that if a person’s Activity / task profile can be determined and the Knowledge or skills profiles associated with each of those Activities are defined then that person’s education and training pre-requisites to effective performance in the workplace can be specified. If these pre-requisites are compared to the individual’s Actual knowledge and skills profiles then the shortfall represents his need for education / training.

Hence, the developed approach consists of a list of discrete job activities that are each linked to an appropriate knowledge profile, i.e. a list of Concepts each having a required Knowledge level. The Activities and Concepts contained in the framework are manufacturing related.

The framework contains over 1,200 relationships between more than 140 Activities and 300 Concepts. These Activities, Concepts and relationships were compiled initially with reference to the available literature after they had been extensively discussed by the research group [Cox et al 1995, Vollman et al 1984, Greene 1987, Muhlemann et al 1992, Kochhar et al 1994, Howard et al 1998, Technical Committee M/136 1975, Wallace 1985]. The tentative framework was then built on further and validated by industrialists from four manufacturers using MRP II. The industrialists completed questionnaires commenting on the proposed lists of Activities, Concepts and relationships, and added entries where the lists were found to be lacking.

Four-group classification systems were developed to categorise individuals' Involvement levels with the Activities and their Knowledge levels of particular Concepts. Four levels were decided upon as this was considered to be the optimal compromise between having sufficient detail to make the requirements specification accurate and relevant and having so many levels that the differences between adjacent levels were unacceptably vague. The four Involvement levels are outlined in Table 1 and the four Knowledge levels are outlined in Table 2.

| Involvement level | Description |
| --- | --- |
| None | No involvement in the Activity. |
| Limited | Being involved in an Activity indirectly or in a superficial sense. |
| Some | Being involved in an Activity which does not constitute a major part of the person's job description. The involvement may be in some sort of assisting or overseeing role. |
| Detailed | Being actively involved in an Activity, generally on a day-to-day basis. The Activity is likely to be a significant part of this individual's job description. |

Table 1: Involvement Levels

| Knowledge level | Description |
| --- | --- |
| None | No knowledge required of the Concept. |
| Awareness | Conceptual background information whereby an individual has some knowledge of the topic under consideration. |
| Overview | The level of understanding that a person requires to effectively manage the day to day activities. (This may be higher level conceptual information as opposed to a thorough understanding of the topic.) |
| Detailed knowledge | The level of understanding required of a particular topic by an individual whose job activities necessitate a thorough understanding of the topic. |

Table 2: Knowledge Levels
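The comparison between Required and Actual Knowledge profiles can be illustrated with a short sketch. It assumes that the four Knowledge levels of Table 2 form an ordered scale; the integer coding, the function name and the sample profiles are illustrative assumptions and are not taken from the tool itself.

```python
from enum import IntEnum


class Knowledge(IntEnum):
    """Knowledge levels of Table 2, coded as an ordered scale (an assumption)."""
    NONE = 0
    AWARENESS = 1
    OVERVIEW = 2
    DETAILED = 3


def knowledge_gaps(required, actual):
    """Return {concept: (required, actual)} where the actual level falls short.

    A Concept missing from `actual` is treated as Knowledge.NONE.
    """
    gaps = {}
    for concept, needed in required.items():
        held = actual.get(concept, Knowledge.NONE)
        if held < needed:
            gaps[concept] = (needed, held)
    return gaps


# Hypothetical profiles: the Concept names appear in the framework,
# but the levels are invented for illustration.
required = {
    "Forecast": Knowledge.DETAILED,
    "Data accuracy": Knowledge.OVERVIEW,
    "Bill of material": Knowledge.AWARENESS,
}
actual = {
    "Forecast": Knowledge.AWARENESS,
    "Data accuracy": Knowledge.OVERVIEW,
    "Bill of material": Knowledge.DETAILED,
}

for concept, (needed, held) in knowledge_gaps(required, actual).items():
    print(f"{concept}: has {held.name}, needs {needed.name}")
```

In this sketch only 'Forecast' is reported as an education need; a Concept for which the Actual level equals or exceeds the Required level produces no entry.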

2.1 Need for Computerisation of the Approach

In order to analyse an individual's education and training requirements a significant amount of data needs to be collected [Boydell & Leary 1996]. Detailed information must be gathered regarding the tasks that the individual performs as part of their role within the company, and data regarding the person's existing knowledge of the pertinent Concepts also needs to be elicited. This information is collected using two questionnaires: the first determines the PPC-related activities that the person is involved with and the second determines the individual's knowledge profile of the relevant concepts. Questionnaires provide a useful structure which benefits the needs specification process [Reid & Barrington 1997].

The questions in the second questionnaire that a person completes depend on the answers given to the first. This tailoring process, while quite mechanistic, would be prohibitively time consuming if it were performed by hand. Tailoring the second questionnaire by hand would also mean a significant delay between completion of the first and second questionnaires, and in most instances this would be unacceptable.

A person using a computerised version of the education requirements specification approach would complete the first questionnaire by entering the relevant answers where prompted and the computer tool would, as far as the user would be concerned, instantaneously analyse the answers and tailor the second questionnaire accordingly. In this way the user could complete the two questionnaires back-to-back.
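The tailoring step can be sketched as follows. This is a minimal illustration, not the tool's actual logic: it assumes that any Involvement level other than "None" brings an Activity's Concepts into the second questionnaire, and the Activity-to-Concept mapping shown is a small hypothetical fragment (the Activity 'Process sales orders' and the Concepts 'Time fence' and 'Sales order' are invented for the example).

```python
# Hypothetical fragment of the Activity -> Concepts relationships.
ACTIVITY_CONCEPTS = {
    "Generate the MPS": ["Forecast", "Blanket order", "Bill of material"],
    "Set MPS time fences / frozen zone": ["Time fence", "Bill of material"],
    "Process sales orders": ["Sales order", "Forecast"],
}


def tailor_questionnaire(involvement):
    """Return the Concepts to ask about, given {activity: involvement level}.

    Assumption: every Activity answered with a level other than "None"
    contributes all of its linked Concepts, and each Concept is asked once.
    """
    concepts = set()
    for activity, level in involvement.items():
        if level != "None":
            concepts.update(ACTIVITY_CONCEPTS.get(activity, []))
    return sorted(concepts)


answers = {
    "Generate the MPS": "Detailed",
    "Set MPS time fences / frozen zone": "None",
    "Process sales orders": "None",
}
print(tailor_questionnaire(answers))
```

Because the lookup is a simple set union, the second questionnaire can be produced as soon as the first has been completed.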

Similarly, the analysis of the questionnaires and comparison of a person's actual knowledge profile with the suggested knowledge profile would be a time consuming process as indeed would the formatting and generation of the reports detailing the results of the analysis. For these reasons computerisation was considered to be crucial if the approach was to be used in a practical time-scale and to be financially viable.

The fact that computers are inexpensive, common in the workplace and that the levels of computer-literacy of people in general are continuing to increase led to the decision to computerise the approach to specifying education needs. The approach was then computerised using the MS Access database environment.

3 Computerisation of the Approach and Population of the Framework

Prior to the computerisation of the requirements specification framework it was necessary to decide the key attributes that an effective computerised tool would need to incorporate.

3.1 Functional Requirements of the Computerised Tool

Prior to computerisation of the developed education & training requirements specification approach a functional specification of the computerised tool was developed. After a number of iterations it was agreed that the system should have the following features:

· The system should be easy to use and operate so that no more than a minimal amount of training would be required by potential users.

· The system should not allow any unauthorised users to modify or delete any parts of the model, i.e. activities, concepts, relationships etc.

· The system should be made as fool-proof as is practical, i.e. it should incorporate error checking routines and disallow the input of invalid data.

· The system must facilitate the swift input of information into it.

· The tool must be able to store all data that is entered into it.

· The tool must be able to perform the necessary data processing and analysis.

· The tool must be able to output results in an understandable and useful format.

· In addition to normal textual reports, the output should be presented in a graphical format.

· The tool must be flexible enough to enable its contents to be modified as necessary at a subsequent date.

Once the functional specification of the proposed tool had been agreed the process of converting the paper-based education & training requirements specification approach into a viable computer tool could then be undertaken.

3.2 Computerisation of the Approach

It was necessary to select a development tool / language / environment that would facilitate the easy and quick development of the education & training requirements specification tool having the required functionality. Hence, the main criteria for development environment selection were:

· Ability to handle large amounts of data

· Ability to process and query information from collected data

· Ability to display / output results in a useful way

· Ease with which a user friendly interface could be developed

· Ease with which the tool could be modified at a later date

· Ideally be inexpensive and widely available

Microsoft Access 97 was used as the development environment because it met the above criteria better than the alternatives that were considered, i.e. a spreadsheet and a 4GL. The structure of the resulting tool is described in the following section of this paper; the following sub-section describes how the computerised framework was populated.

3.3 Population of the Framework

Once the framework for specifying education requirements, as outlined in section 2, had been computerised it was then necessary to populate the framework with relevant and meaningful information, i.e. MRP II Activities and Concepts and the associated links between them. This initially involved a thorough analysis and synthesis of the available literature and latterly involved gathering information from a number of different companies.

The contents of the framework were then further populated with information gathered predominantly by questionnaire from 33 experienced PPC system users from 4 different good-practice MRP II companies. The information gathered consisted of Activities, Concepts and relationships with associated Knowledge levels, such that after this stage of the research the framework contained Required knowledge profiles under each Activity. The following section outlines the computerised tool that was developed.
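The kind of relational structure that such a framework implies can be sketched as three tables: Activities, Concepts, and the relationships that link them with a Required Knowledge level. The sketch below uses Python's built-in sqlite3 module rather than MS Access, and the table names, column names and sample levels are assumptions made for illustration; the paper does not give the tool's actual schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE activity (
        id     INTEGER PRIMARY KEY,
        module TEXT NOT NULL,
        name   TEXT NOT NULL UNIQUE
    );
    CREATE TABLE concept (
        id         INTEGER PRIMARY KEY,
        name       TEXT NOT NULL UNIQUE,
        definition TEXT
    );
    -- One row per Activity-Concept link, carrying the Required Knowledge level
    -- (1 = Awareness, 2 = Overview, 3 = Detailed knowledge; coding assumed).
    CREATE TABLE relationship (
        activity_id    INTEGER NOT NULL REFERENCES activity(id),
        concept_id     INTEGER NOT NULL REFERENCES concept(id),
        required_level INTEGER NOT NULL CHECK (required_level BETWEEN 1 AND 3),
        PRIMARY KEY (activity_id, concept_id)
    );
""")

conn.execute("INSERT INTO activity (module, name) VALUES (?, ?)",
             ("Production Planning and MPS", "Generate the MPS"))
conn.executemany("INSERT INTO concept (name) VALUES (?)",
                 [("Forecast",), ("Blanket order",)])

# Illustrative links; the levels are invented, not taken from the framework.
links = [("Generate the MPS", "Forecast", 3),
         ("Generate the MPS", "Blanket order", 2)]
for activity, concept, level in links:
    conn.execute(
        """INSERT INTO relationship (activity_id, concept_id, required_level)
           SELECT a.id, c.id, ? FROM activity a, concept c
           WHERE a.name = ? AND c.name = ?""",
        (level, activity, concept),
    )


def required_profile(activity_name):
    """Return [(concept, required_level), ...] for one Activity, by Concept name."""
    rows = conn.execute(
        """SELECT c.name, r.required_level
           FROM relationship r
           JOIN activity a ON a.id = r.activity_id
           JOIN concept c ON c.id = r.concept_id
           WHERE a.name = ?
           ORDER BY c.name""",
        (activity_name,),
    )
    return rows.fetchall()


print(required_profile("Generate the MPS"))
```

Keeping the relationships in their own table means that Activities, Concepts and links can each be added or amended independently, which is in keeping with the requirement that the tool's contents be modifiable at a later date.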

4 Computerised Tool for Specifying Education Requirements

The previous section of the paper outlined the process of converting the approach that had been developed for specifying the education requirements of people in companies attempting a PPC system implementation into a computer-based application. This section outlines the structure of the application and describes how to navigate round the tool, the functions that it can perform and how the tool can be used.

4.1 Structure & Usage of the Computerised Tool

The application is navigated via “forms”. Forms are interactive windows that can contain information and allow information to be entered and selections to be made, e.g. which screen to go to next. “Reports”, on the other hand, are for displaying and printing preformatted information only, e.g. print previewing a questionnaire.

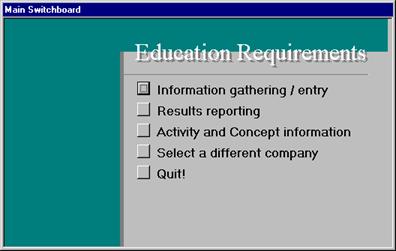

A summarised outline of the screens and the options each contains is given in Figure 1, which shows the overall structure of the application. The box outlined in bold is the “switchboard” which, as its name implies, is the application’s central screen via which the application can be navigated. The options offered by this screen are:

· Data entry: for instance information about the company or an individual

· Results reporting: for instance to see the report detailing the Activities a selected person is involved with

· Activity / Concept details: to see information regarding the Concepts the application comprises

· Select a different company: this option takes you back to the previous screen so that information about a different company can be entered or viewed

· Quit: to close all the application’s windows and leave the program

These are discussed further below.

On opening the application the user sees a window giving introductory information, with the option to view more information if desired. Once this screen has been accepted (the “OK” button has been clicked), the company whose data is to be viewed / entered is selected by double-clicking the company name. Once the company has been selected the user is presented with the “switchboard”, see Figure 2.

The options available on the switchboard are:

· Data entry

· Results reporting

· Activity / Concept details

· Select a different company

· Quit

The last two options have already been outlined and require no further discussion. The remaining three options will be described in greater detail in the following sub-sections.

4.2 Data entry

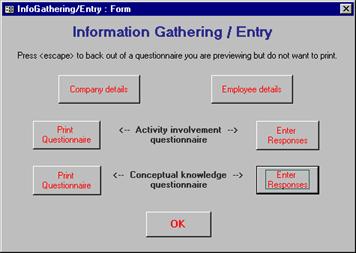

After selecting the Data entry module from the switchboard the user is presented with a screen of options, see Figure 3, via which the following information can be viewed and edited:

· Company details: including address, phone number, turnover, number of employees, main products, manufacturing control system currently in use, amount of money allocated to staff education and training and so on.

· Employee details: for instance, name, job title, phone number and years in service.

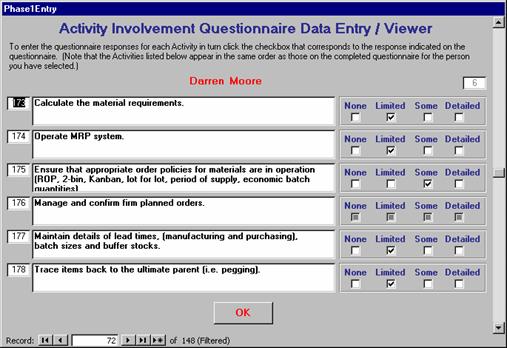

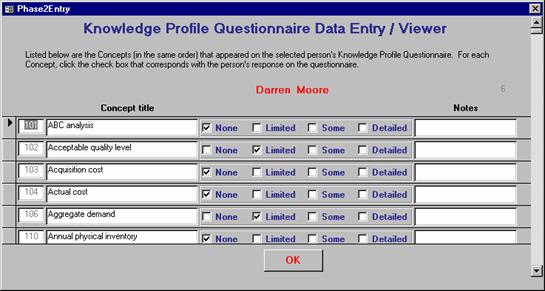

· Activity involvement questionnaire (Figure 4): provides the list of Activities that are in the framework and requires that the user places a check in the box that most appropriately describes his level of involvement with the Activity under consideration.

· Conceptual knowledge questionnaire (Figure 5): consists of a list of the Concepts that are relevant to the user’s job role (as defined by his responses to the Activity involvement questionnaire). The user places a check in the box that best describes the Knowledge level he has of each Concept.

As well as the above the Data entry module also allows the user to view or print the Activity involvement questionnaire and the Conceptual knowledge questionnaire in Report format.

4.3 Results reporting

This module is the most important module of the system from the user’s point of view. This is because it is here that the results of the education requirements specification analyses can be viewed and printed.

Selecting the Results reporting module opens a form containing two options: Activity profile information or Knowledge profile information. Selecting the Activity profile option opens a window listing the people whose details are in the database. When a person’s name is double-clicked the Activity involvement report is opened containing their information. As with all reports, the person’s Activity involvement report can be viewed or printed (not edited).

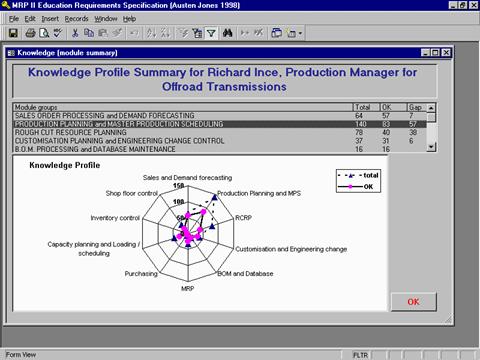

If the Knowledge profile information option is selected instead, a different procedure is invoked. As before, a screen is opened containing the names and details of the people in the database so that a selection can be made. Once the person’s name has been highlighted the user can either view the information interactively via forms or view it in a printable format as a report. The report groups the results at three separate levels as follows:

1. Summary level statistics: comprising an overall “score” for the individual, the number of Activities and Concepts with which the person was associated and, of these Concepts, the number for which the person’s knowledge level was below the suggested knowledge level.

2. Module level: listed under each Module are the Activities in that Module that the person is involved with. Also indicated next to each Activity is whether there were any Concepts associated with the Activity for which the person’s knowledge level was below the suggested knowledge level.

3. Activity level: under each Activity the person is associated with are the Concepts relevant to that Activity. Listed with each Concept are the person’s Actual knowledge level of it and the Suggested knowledge level – it is at this level that Knowledge gaps in specific Concepts are highlighted.

For reference purposes all of the Concepts contained in the report are listed with their definitions at the end of the report.
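The Module and Activity levels of the report described above can be sketched as a nested grouping of flat result rows. This is an illustration only: the row layout, the function name and the integer coding of Knowledge levels (0 = None to 3 = Detailed knowledge) are assumptions, and the sample levels and Activity-Concept pairings are invented.

```python
def build_report(rows):
    """Group rows of (module, activity, concept, suggested, actual).

    Returns {module: {activity: {"has_gap": bool,
                                 "concepts": [(concept, actual, suggested), ...]}}}
    where has_gap is True if any Concept's actual level is below the suggested one.
    """
    report = {}
    for module, activity, concept, suggested, actual in rows:
        entry = report.setdefault(module, {}).setdefault(
            activity, {"has_gap": False, "concepts": []}
        )
        entry["concepts"].append((concept, actual, suggested))
        if actual < suggested:
            entry["has_gap"] = True
    return report


module = "Production Planning and MPS"
rows = [
    (module, "Generate the MPS", "Forecast", 3, 1),
    (module, "Generate the MPS", "Bill of material", 2, 3),
    (module, "Set MPS time fences / frozen zone", "Data accuracy", 1, 1),
]

for activity, entry in build_report(rows)[module].items():
    flag = "gap" if entry["has_gap"] else "no gap"
    print(f"{activity}: {flag}")
```

The per-Activity flag corresponds to the Module level of the report, while the list of Concepts held under each Activity corresponds to the Activity level.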

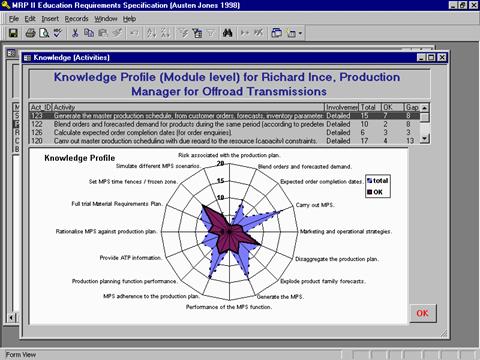

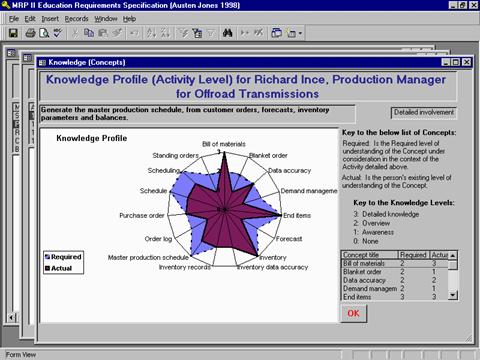

Alternatively, the results of the education requirements analysis for a particular individual can be viewed interactively via a number of specially designed forms. Like the structure of the report, there are three different levels of forms. At the highest level the results of the education requirements analysis are presented at PPC system Module level (i.e. grouped Activities). Information pertaining to a particular Module (group of Activities) can be seen by double-clicking the Module’s title; in this way it is possible to “drill down” to see information at a greater level of detail. Similarly, selecting a particular Activity from the chosen Module invokes a further form at a lower level of detail, i.e. the Concepts and associated Knowledge levels that are relevant to the Activity are displayed. Figures 6, 7 and 8 illustrate this ability to drill down through the results to explore the underlying detail.

The information contained in the report and displayed in the forms aside from being presented in textual format is also presented graphically in the form of radar diagrams. These diagrams are an effective method of presenting the text-based information in a speedily interpretable manner [Thorsteinsson 1997].

Figure 6 shows the education requirements at the highest level of aggregation. The screen shows a list box listing the MRP II system Modules, with the radar diagram below it. To the right of the list box are three columns of numbers, whose contents are as follows:

· Desired: indicates the total number of Concepts that the person requires knowledge of for the Activities in the Module

· OK: indicates the number of Concepts in the module of which the person has the suggested level of understanding

· Gap: indicates the number of Concepts in the module that the person may benefit from more knowledge of

The radar diagram presents this information graphically, with the size of the gap between the two plots, i.e. the Desired profile and the OK profile, being related to the size of the education task. As the radar diagram in Figure 6 shows, the largest knowledge gap exists for the Activities contained within the ‘Production Planning and Master Production Scheduling’ PPC system modules. Conversely, the knowledge gap that exists for the ‘Sales Order Processing and Demand Forecasting’ modules is much smaller. The user is able to drill down on a module to view the source of the knowledge gap. Figure 7 shows the result of drilling down on the Production Planning and MPS module group so that the reported knowledge gap can be explored in more detail.
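The Desired, OK and Gap counts shown against each Module could be derived along the following lines. This is a sketch under stated assumptions: Knowledge levels are coded as integers (0 = None to 3 = Detailed knowledge), each input row represents one distinct Concept within a Module, and the function name and sample rows are hypothetical.

```python
from collections import defaultdict


def module_summary(rows):
    """Summarise rows of (module, concept, required_level, actual_level).

    Desired = Concepts the person requires some knowledge of,
    OK      = those for which the actual level meets the required level,
    Gap     = those for which it falls short (Desired = OK + Gap).
    """
    summary = defaultdict(lambda: {"Desired": 0, "OK": 0, "Gap": 0})
    for module, _concept, required, actual in rows:
        if required == 0:
            continue  # no knowledge required, so the Concept is not counted
        counts = summary[module]
        counts["Desired"] += 1
        if actual >= required:
            counts["OK"] += 1
        else:
            counts["Gap"] += 1
    return dict(summary)


# Invented levels, for illustration only.
rows = [
    ("Production Planning and MPS", "Forecast", 3, 1),
    ("Production Planning and MPS", "Blanket order", 2, 0),
    ("Production Planning and MPS", "Bill of material", 2, 3),
    ("Sales Order Processing", "Purchase order", 1, 1),
]

for module, counts in module_summary(rows).items():
    print(module, counts)
```

Plotting the Desired and OK counts for each Module on a common set of radial axes would give the two profiles of the radar diagram, with the Gap count being the distance between them.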

As in Figure 6, the screen shown in Figure 7 consists of two main areas, i.e. a list box area and a radar diagram. The information displayed in each area of this screen is at the MRP II Activity level rather than at Module level as was the case in Figure 6. Figure 7 shows the varying sizes of the knowledge gaps that are associated with each Activity in the module. As can be seen, some Activities have no knowledge gap associated with them, e.g. ‘Set MPS time fences / frozen zone’. Conversely, sizeable knowledge gaps are reported for a number of Activities, such as ‘Generate the MPS’; drilling down on this Activity displays the more detailed results shown in Figure 8.

The radar diagram in Figure 8 shows the Required and Actual Knowledge level for each Concept that is relevant to the Activity. The figure shows knowledge gaps for individual Concepts in the context of the selected Activity; for instance, gaps are indicated for the Concepts ‘Forecast’ and ‘Blanket order’. The radar diagram also shows that for a number of Concepts the Actual and Required Knowledge levels are the same, e.g. ‘Data accuracy’ and ‘Purchase order’, and shows an example of where the individual’s knowledge of a Concept is greater than is actually required, namely ‘Bill of material’. By viewing the results at this level of detail an individual’s strengths and weaknesses in the context of the Activities he performs can be quickly assimilated.

The radar diagram facilitates a fast and straightforward determination of the person's key areas of weakness. The list box to the right of the radar diagram contains the same information as the radar diagram but is in the format of a list of Concepts and associated Required and Actual Knowledge levels.

Whichever way the results are viewed, i.e. on-screen or in the printed report, the person’s knowledge weaknesses / needs are displayed in a concise manner and are immediately obvious to the reader. It is the ease of interpretation of the results coupled with the ability to enquire as to the source of a particular education need that are the key strengths of the computerised tool.

4.4 Activity / Concept details

This module of the computerised application is essentially for reference and enables the bones of the education requirements specification model, i.e. the Activities & Involvement levels and the Concepts & Knowledge levels, to be viewed. On selecting this module from the switchboard a form offers the user four options, namely:

1. Concept information: all of the Concepts contained in the model are displayed here with their associated definitions. The Concepts can be sorted alphabetically or a particular character string can be searched for.

2. Involvement levels: the four Involvement levels are described and defined

3. Knowledge levels: similarly, the four Knowledge levels are defined

4. Activity information: selecting this option displays a form listing the nine MRP II Module groups. Selecting one of these Module groups lists all of the Activities, with explanations, contained in that group. By double-clicking an Activity the user is able to drill down further to see the Concepts associated with that Activity.

5 Discussion

The previous section described the structure and in-built functionality of the computerised tool for determining the education requirements of personnel using MRP II systems. This section discusses the issues of accuracy and effectiveness of the requirements specification tool and also how the tool should be used to offer advantage during system implementation projects.

5.1 Accuracy & Effectiveness of the Computerised Tool

The accuracy and effectiveness of the computerised version of the education requirements specification tool rely on a number of different issues. These are discussed below with the associated measures and steps taken to ensure that the accuracy and effectiveness of the tool were maximised.

Framework contents

The first issue is the accuracy and completeness of the Activities and Concepts contained in the model and the appropriateness of the relationships between them. The contents of the model were tested and validated by a total of over 60 people working in industry from 12 different manufacturing companies. Four of these companies were involved during the developmental stage of the research, nine of the companies were used to test the framework and the education requirements specifications that it yielded, and the computer tool described in this paper was tested on-site in a further company.

Data integrity and processing logic

For the tool to yield accurate specifications it is not enough that the contents of the tool are accurate; the underlying logic and data processing algorithms used to determine the specifications must also function correctly and predictably. For instance, the queries in the tool must process data in the way they were meant to and extract the correct information, and data must not be lost or corrupted. Data integrity and processing logic issues were explicitly tested for throughout the development of the computerised tool. The tool was validated using test data, for instance, by comparing the results output by the tool with those predicted by manually processing the test data.

Results / output reports

To be effective, correct and useful information must be displayed in the hardcopy report in a structured way and at the right level of detail. The results / output of the tool were fed back to 28 people from 7 different manufacturers who were subsequently asked to complete a questionnaire regarding the output of the tool and the approach to education requirements specification. The vast majority of the people considered the approach to be valid, the contents of the framework, i.e. Activities and Concepts, to be accurate, comprehensive and at the right level of detail, and the education requirements specification reports to be well presented and useful.

User interface and functionality

The tool was demonstrated to a variety of people periodically throughout its development so that feedback regarding the structure and "feel" of the application, rather than the accuracy of its content per se, could be collected and the application updated in line with the elicited comments. In this way the necessary features and functionality were built into the tool in iterative developmental cycles.

"Acid" test

The discussion above has indicated that the framework for specifying education needs is valid, accurate and useful, and that the computerised approach follows the intended processing logic, outputs accurate and useful specifications and contains the requisite functionality. These aspects of the tool were, to a large degree, determined independently of each other and were established by different groups of people. The "acceptance" stage of testing was concerned with validating all aspects of the computerised tool and made use of five production personnel from a racing car manufacturer.

Each of the five people was assessed on site at their desk using a laptop computer with the computerised tool preloaded on it. The assessment (of the tool) started with a brief introduction, typically lasting a few minutes, to the computerised requirements specification tool, the layout of the screens, how to navigate round the tool and so forth. This was followed by the individuals completing the Activity Involvement questionnaire (Figure 4) and then the Conceptual Knowledge questionnaire (Figure 5). The questionnaires took between 30 minutes and 1 hour to complete. The results were then interrogated by the individuals using the drill-down feature illustrated by Figures 6, 7 & 8. The individuals were also given a hardcopy report of the education specifications.

Semi-structured interviews were then carried out to assess how the tool and the education specifications had been received by the personnel who had used it. The interviews showed that the overall approach to requirements specification was valid and effective. While the education specifications were generally considered to be accurate, concern was expressed over possible terminological problems and over the fact that the results could only be as accurate as the answers given on the questionnaires. The comment was also made that some of the requirements were inaccurate because some Concepts were specified that were not relevant to their particular operations; this was viewed as a strength, making the tool more generally applicable, rather than a problem per se. However, it does imply that filtering Concepts irrelevant to the company under consideration from the super-set of Concepts would increase the relevance and accuracy of the needs specifications that the tool produces in the context of the particular company.
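The suggested filtering step could be as simple as the following sketch. It is not a feature of the tool as tested; the function name, the integer coding of Knowledge levels and the company's selection of relevant Concepts are all hypothetical.

```python
def filter_profile(required, company_concepts):
    """Keep only the Concepts that the company has marked as relevant."""
    return {
        concept: level
        for concept, level in required.items()
        if concept in company_concepts
    }


# Generic Required Knowledge profile (levels invented for illustration).
generic_profile = {"Forecast": 3, "Blanket order": 2, "Bill of material": 2}

# Hypothetical selection made by a company that does not use blanket orders.
relevant_here = {"Forecast", "Bill of material"}

print(filter_profile(generic_profile, relevant_here))
```

Applying such a filter before the Conceptual knowledge questionnaire is generated would also shorten the questionnaire, since Concepts excluded for the company would not need to be asked about.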

Three of the five people considered the Activities to be at the correct level of detail for the purposes of requirements specification. The responses from the remaining two were at odds with each other: one suggested that the Activities were too detailed while the other suggested that more specific Activities would be better.

The tool itself was well received. All five testers said that it was easy to use, logically structured and straightforward to navigate, with one person saying that the tool had a "professional feel" and that it was very reliable. Overall the tool was considered to be effective in its aim of being an accurate and viable solution to the education requirements specification problem.

6 Conclusions

Despite the need for a tool to accurately and efficiently determine the education and training needs of PPC system users there has been little research in this area. The framework and the associated computer tool described in this paper sought to address that gap in the literature, which represents a real problem. The original research project was concerned with developing an approach for effectively specifying the education requirements of people in manufacturing companies attempting to implement manufacturing resource planning. Due to the amount of data that needs to be collected and the size of the data processing task required to make an accurate and sufficiently detailed assessment of the education needs of individuals, it was necessary to computerise the approach in order to make it viable.

The results of the ‘acid’-testing of the tool on site in a manufacturing company showed the approach to specifying education needs to be a valid and generic one which enabled requirements to be accurately assessed in an effective way and at the right level of detail. Importantly, the approach was also shown to be better than existing methods of needs specification. However, it was also highlighted that the list of Concepts relevant to each Activity, in order to be generic, included some not relevant to the organisation it was being tested in. Hence, filtering the super-set of Concepts would help to improve the relevance and accuracy of the resulting needs specifications.

The authors are grateful to the Engineering & Physical Sciences Research Council (EPSRC) for the award of a research grant that made it possible to undertake this work.

7 References

Bee & Bee 1994

Bee, F. & Bee, R., (1994), Training Needs Analysis and Evaluation, Institute of Personnel Management, London

Boydell & Leary 1996

Boydell, T. & Leary, M., (1996), Identifying Training Needs, Institute of Personnel and Development, London

Camp et al 1986

Camp, R., Blanchard, P. & Huszczo, G., (1986), Toward a More Organizationally Effective Training Strategy and Practice, Prentice Hall, Englewood Cliffs, N.J.

Cox et al 1995

Cox, J., Blackstone, J. & Spencer, M., (1995), APICS Dictionary, APICS Inc., Falls Church, VA

Duchessi et al 1988

Duchessi, P., Schaninger, C., Hobbs, D. & Pentak, L., (1988), "Determinants of success in implementing material requirements planning (MRP)", Manufacturing and Operations Management, pp. 263-304.

Greene 1987

Greene, (1987), Production And Inventory Control Handbook, McGraw-Hill Inc., New York

Howard et al 1998

Howard, A., Kochhar, A. & Dilworth, J., (1998), "Functional requirements of manufacturing planning and control systems in medium-sized batch manufacturing companies", Integrated Manufacturing Systems, Volume 10 Number 3

Iemmolo 1994

Iemmolo, G., (1994), "MRP II education: a team-building exercise", Hospital Material Management Quarterly, Volume 15 Issue 4, pp. 33-39.

Jones et al 1998

Jones, A., Kochhar, A. & Hollwey, M., (1998), "Education and training requirements specification for implementation of manufacturing control systems", Strategic Management of the Manufacturing Value Chain, Edited by: Bititci, U. & Carrie, A., Kluwer Academic Publishers, Boston

Kochhar et al 1994

Kochhar, A., Davies, A. & Kennerley, M., (1994), "Performance indicators and benchmarking in manufacturing planning and control systems", Proceedings of the OE/IFIP/IEEE International Conference on Integrated and Sustainable Production - Re-engineering for Sustainable Industrial Production, Lisbon, Portugal, May 1997, pp. 487-494.

McClelland 1994

McClelland, S., (1994), "Training needs assessment data gathering methods: Part 1, Survey Questionnaires", Journal of European Industrial Training, Vol 18 No 1, pp. 22-26.

Muhlemann et al 1992

Muhlemann, Oakland & Lockyer, (1992), Production and Operations Management, Pitman Publishing, London

Reid & Barrington 1997

Reid, M. & Barrington, H., (1997), Training Interventions, Institute of Personnel and Development, London

Roberts 1986

Roberts, B., (1986), "Education and training programs to support MRP implementations", Material Requirements Planning Reprints, APICS Inc., pp. 85-104

Technical Committee M/136 1975

Technical Committee M/136, (1975), Glossary of Production Planning and Control Terms, British Standards Institution, London

Thorsteinsson 1997

Thorsteinsson, U., (1997), "A virtual company for educational purpose", Proceedings of the 32nd International MATADOR Conference, Manchester, pp. 221-226

Udo & Ehie 1996

Udo, G. & Ehie, I., (1996), "Critical success factors for advanced manufacturing systems", Computers & Industrial Engineering, Volume 31 Issue 1-2, pp. 91-94

Vollman et al 1992

Vollman, T., Berry, W. & Whybark, D., (1992), Manufacturing Planning And Control Systems, Irwin Inc., Homewood, IL

Wallace 1985

Wallace, (1985), MRP II: Making It Happen, Oliver Wight Limited Publications, New York

Figures

Figure 1: Structure of the computerised tool around the main Switchboard form

Figure 2: The Switchboard screen

Figure 3: Data Entry options screen

Figure 4: Activity involvement questionnaire screen

Figure 5: Conceptual knowledge questionnaire screen

Figure 6: Screen showing education requirements aggregated to MRP II system Module level

Figure 7: Screen showing education requirements aggregated to MRP II system Activity level

Figure 8: Screen showing education requirements at Concept level
